Welcome to the September edition of the TC10 newsletter.

In this issue, you will find the calls for ICDAR 2023 competitions and ICDAR-IJDAR journal-track papers, a presentation of the New Technologies Show at ECCV 2022, the two latest IJDAR issues, and two new job offers in Barcelona (Spain) and Rouen (France).

I wish you pleasant reading,

Christophe Rigaud

IAPR-TC10 Communications Officer

Call for contributions: feel free to contribute to the TC10 newsletter by sending any relevant news, events, notices, open positions, datasets or links to us at iapr.tc10[at]gmail.com

1) Upcoming deadlines and events

2022

- Deadlines:

- October 28, competition proposal deadline ICDAR 2023

- October 31, 1st round paper submission deadline ICDAR-IJDAR Journal Track

- Events:

- October 16-19, conference ICIP 2022, Bordeaux, France

- October 23-27, conference ECCV 2022, Tel Aviv, Israel

- December 4-7, conference ICFHR 2022, Hyderabad, India

2023 and later

- Deadlines:

- January 15, paper submission deadline ICDAR 2023, San José, California, USA

- Events:

- August 21-26, 2023, conference ICDAR 2023, San José, California, USA

- September 2024, conference ICDAR 2024, Athens, Greece

2) ICDAR2023 Call for Competitions

The ICDAR 2023 Organizing Committee invites proposals for competitions that aim to evaluate the performance of algorithms and methods in areas related to document analysis and recognition.

You are cordially invited to submit a proposal, which should contain the following information:

- Contest title and abstract

- A brief description of the competition, including what the particular task under evaluation is, why this competition is of interest to the ICDAR community, and the expected number of participants

- An outline of the competition schedule

- Description of the dataset to be used, and the evaluation process and metrics for submitted methods

- The names, contact information, and brief CVs of the competition organizers, outlining previous experience in performance evaluation and/or organizing competitions

The following rules shall apply to accepted competitions:

- Competition names must follow a standard format starting with “ICDAR 2023”, e.g. “ICDAR 2023 Competition on …” or “ICDAR 2023 … Competition.”

- Datasets used in the competitions must be made available after the end of the competitions. Specifically, the training data and ground truth must be publicly released and there must be a way to evaluate performance on a test set. This could take the form of an evaluation server, or the test data, ground truth, and evaluation script could be made publicly available. In principle, the organizers should submit the dataset to IAPR TC10 or TC11.

- Evaluation methodologies and metrics used must be described in detail so that results can be replicated later. Evaluation scripts must be released afterwards.

- Each competition has to be presented with a poster at a prominent place at the conference venue, and selected competitions will get the chance to be presented orally in a dedicated session.

- Competitions must have a sufficient number of participants to be able to draw meaningful conclusions.

- Reports (full papers) on each competition will be reviewed and, if accepted (the competition ran according to plan, attracted a minimum level of participation and is appropriately described), will be published in the ICDAR 2023 conference proceedings.

- Participants should not have access to the ground-truthed test dataset until the end of the competition. The evaluation should be done by the organizers.

Submission Guidelines & Inquiries

All proposals should be submitted by electronic mail to the Competition Chairs (Kenny Davila, Dimosthenis Karatzas, and Chris Tensmeyer) via:

competitions-chairs@icdar2023.org

We encourage competition proposals with a solid plan to remain active and challenging for the community beyond ICDAR 2023.

For any inquiries regarding the competitions, please contact us via the above email address.

Important Dates

October 28, 2022: Competition Proposal Due

November 15, 2022: Competition Acceptance Notification

December 1, 2022: Individual Competition Websites are Live

March 20, 2023: Suggested deadline for competition participants

April 10, 2023: Initial Submission of Competition Reports Deadline

May 1, 2023: Camera-Ready Papers Due

July 1, 2023: Communicate Winners to Chairs

August 21-26, 2023: Presentation of results at the ICDAR Conference

3) ICDAR-IJDAR Journal Track

ICDAR-IJDAR Journal Track Call for Papers, 17th International Conference on Document Analysis and Recognition, August 21-26, 2023, San José, California, USA

https://www.springer.com/journal/10032/updates/23440814

Following a tradition established at ICDAR 2019 and 2021, ICDAR 2023 will include a journal paper track that offers the rapid turnaround and dissemination times of a conference, while providing the paper length, scientific rigor, and careful review process of an archival journal.

The ICDAR-IJDAR journal track invites high-quality submissions presenting original research in Document Analysis and Recognition. Journal versions of previously published conference papers, as well as survey papers, will not be considered for this special issue; such papers can be submitted as journal-only papers via the regular IJDAR process.

Accepted papers will be published in a special issue of IJDAR and will receive an oral presentation slot at ICDAR 2023. Authors who submit their work to the journal track commit to presenting their results at the ICDAR conference if their paper is accepted. IJDAR’s publisher, Springer Nature, will make accepted papers freely available for four weeks around the conference. After this, accepted papers will be available from the archival journal.

Format: Journal track paper submissions should follow the IJDAR submission format and the journal’s standard guidelines.

Procedure and Deadlines: While we permit a second round of submissions for the ICDAR-IJDAR journal track for both new submissions and revised papers from the 1st round of submissions, it must be understood by all authors that the full journal review process will be required for all ICDAR-IJDAR track submissions — there is no “short cut.” Papers submitted late in the second round run a risk of the review process not completing in time. In such cases, authors should know that: (1) The submitted paper can still continue under review as an IJDAR paper. (2) The authors always have the option of preparing a shorter version of the paper to submit as a regular ICDAR conference paper.

Questions regarding the status of a paper under review for the ICDAR-IJDAR journal track should be directed to Ms. Katherine Moretti at Springer (katherine.moretti@springer.com). The guest editors have full authority to determine that the journal review process will not complete in time, and to decline submissions that arrive too late. In that case, they will immediately notify the authors, who can then decide either to leave the paper in the standard journal reviewing system or to withdraw their manuscript from the journal.

Important Dates

October 31, 2022

1st round journal track paper submissions due

January 4, 2023

1st round journal track paper notifications

January 15, 2023

Regular ICDAR proceedings paper submission deadline

March 15, 2023

2nd round journal track paper submissions due

May 15, 2023

2nd round journal track paper notifications

Special Issue Guest Editors

ICDAR 2023 PC Chairs

– Gernot A. Fink

– Rajiv Jain

– Koichi Kise (liaison to IJDAR)

– Richard Zanibbi

Inquiries

For additional information, please email one of the editors of this special issue or consult the FAQ that will be posted on the IJDAR website.

4) ECCV New Technologies Show

New Technologies Show @ECCV 2022

Do you think that your research idea can generate impact?

Pitch it at ECCV!

Research ideas are the fuel for innovation. ECCV 2022 wants to give researchers a stage to present not only the scientific merits of their work, but also their ideas about how their research can lead to meaningful innovation, highlighting possible applications enabled by their research.

ECCV 2022 will host a “New Technologies Show”, where researchers are invited to pitch their technologies to a different crowd. A jury comprising entrepreneurs and innovation experts will give feedback to all participating teams, while the most promising technologies will be offered further mentoring post-ECCV.

All potential participants in the New Technologies Show will be offered a short (~1-hour) online seminar before ECCV on how to create a successful pitch. Research teams are invited to express their interest and are encouraged to attend the online seminar before confirming whether they wish to present their technology in the New Technologies Show.

The New Technologies Show session will take place on-site. All participants in the New Technologies Show will be invited to the industry track reception during ECCV.

Please use the online form to express your interest in participating:

https://forms.gle/vy9fwxaXPj2jC9Ay6

Important Dates:

Expressions of interest: September 10, 2022

Online Course: September 20, 2022

Confirmation of participation: October 1, 2022

New Technologies Show session (on-site): October 26, 2022

Organisers:

Amir Markovitz (Amazon Research), Yair Kittenplon (Amazon Research), Shai Mazor (Amazon Research), Omar Zahr (Tandemlaunch), Dimosthenis Karatzas (Computer Vision Center), Chen Sagiv (SagivTech), Lihi Zelnik-Manor (Technion)

For any inquiries, please contact: industrychairs@ecva.net

Supported By:

TandemLaunch Inc.

– TandemLaunch will provide an hour-long online group workshop on preparing an investment narrative based on their invention.

– All applicants will be considered for an invitation into the TandemLaunch Startup Foundry Investment program.

Awards:

– Winners will receive 2 hours of Technology and IP Strategy mentoring and 1 hour of CTO career mentoring with the CTO of TandemLaunch.

5) IJDAR article alert (vol. 25, issues 2 & 3)

Volume 25, Issue 2, June 2022

https://link.springer.com/journal/10032/volumes-and-issues/25-2

- Segmentation for document layout analysis: not dead yet (Logan Markewich, Hao Zhang and Seok-Bum Ko)

- Feature learning and encoding for multi-script writer identification (Abdelillah Semma, Yaâcoub Hannad and Mohamed El Youssfi El Kettani)

- Robust text line detection in historical documents: learning and evaluation methods (Mélodie Boillet, Christopher Kermorvant and Thierry Paquet)

- Arbitrary-shaped scene text detection with keypoint-based shape representation (Shuxin Qin and Lin Chen)

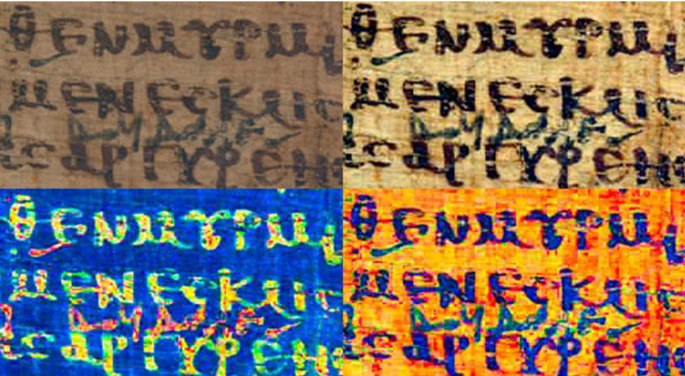

- Personalizing image enhancement for critical visual tasks: improved legibility of papyri using color processing and visual illusions (Vlad Atanasiu and Isabelle Marthot-Santaniello), open access

- Asma Naseer, Sarmad Hussain, Kashif Zafar, Ayesha Khan

- Correction to: Personalizing image enhancement for critical visual tasks: improved legibility of papyri using color processing and visual illusions (Vlad Atanasiu and Isabelle Marthot-Santaniello)

Volume 25, Issue 3, September 2022

https://link.springer.com/journal/10032/volumes-and-issues/25-3

- Scene text detection via decoupled feature pyramid networks (Min Liang, Jie-Bo Hou and Jingyan Qin)

- CarveNet: a channel-wise attention-based network for irregular scene text recognition (Guibin Wu, Zheng Zhang and Yongping Xiong)

- Fusion of visual representations for multimodal information extraction from unstructured transactional documents (Berke Oral and Gülşen Eryiğit)

6) Job offers – 2 new

Postdoctoral Research Position on Document Intelligence and Privacy Preserving Learning – new

The Computer Vision Center (CVC), Barcelona, has a vacancy for a postdoc to work at the frontier between document intelligence and privacy preserving learning.

The position is linked to the European Lighthouse on Secure and Safe AI (ELSA) project https://elsa-ai.eu/, funded by the European Union’s Horizon Europe research and innovation programme.

The successful candidate is expected to contribute to the design and development of AI solutions for document understanding, in particular Document Visual Question Answering systems, employing privacy-preserving techniques and the infrastructure set up by the ELSA project.

The candidate is expected to have extensive experience in document intelligence and/or private and robust collaborative learning (differential privacy, federated learning), as well as an appetite to learn.

All applications must include a full CV in English with contact details, and a cover letter in English containing a statement of interest.

Duration: between 30 and 36 months, depending on the start date

Salary: €40,000 annual gross, according to CVC labour categories

More information and application procedure:

https://euraxess.ec.europa.eu/jobs/815190

http://www.cvc.uab.es/blog/2022/07/19/postdoctoral-research-position/

This project has received funding from the European Union’s Horizon Europe research and innovation programme under grant agreement No 101070617. Views and opinions expressed are those of the author(s) only and do not necessarily reflect those of the European Union. Neither the European Union nor the granting authority can be held responsible for them.

Research Engineer Position on Information Extraction and Text Recognition in Historical Document Collections – new

LITIS

LITIS (Laboratoire d’Informatique, Traitement de l’information et des Systèmes) is a research laboratory associated with the University of Rouen Normandie, the University of Le Havre Normandie, and the engineering school INSA Rouen Normandie. Research at LITIS is organized around 7 research teams, which contribute to 3 main application domains: Access to Information, Biomedical Information Processing, and Ambient Intelligence. LITIS currently includes 90 faculty staff members, 50 PhD students, and 20 postdocs and research engineers. The Machine Learning team of LITIS conducts research on modeling unstructured data (signals, images, text, etc.) with machine learning algorithms and statistical models. For more than two decades, it has contributed to the development of reading systems and document image analysis for various applications such as postal automation, business document exchange, and digital libraries.

EXO-POPP project

Optical Extraction of Handwritten Named Entities for Marriage Certificates for the Population of Paris (1880–1940)

Thanks to a collaboration between machine learning specialists and historians, the EXO-POPP project will develop a database of 300,000 marriage certificates from Paris and its suburbs between 1880 and 1940. These marriage certificates provide a wealth of information about the bride and groom, their parents, and their witnesses, which will be analyzed from a host of new angles made possible by the new dataset. These studies of marriage, divorce, kinship, and social networks spanning 60 years will also intersect with transversal issues such as gender, class, and origin. Geolocating the data will provide a rare opportunity to study places and relocations within the city, and linkage with two other databases will make it possible to follow people from birth to death.

Building such a database by hand would take at least 50,000 hours of work. However, thanks to recent developments in deep learning and machine learning, it is now possible to build huge databases with automated reading systems that include handwriting recognition and natural language understanding. Because of these advances, optical printed named entity recognition (OP-NER) now performs very well. In contrast, although machine handwriting recognition has also become a reality thanks to deep learning, optical handwritten named entity recognition (OH-NER) has received little attention. OH-NER is expected to achieve promising results on the handwritten marriage certificates dating from 1880 to 1923. The project’s research questions will focus on the best strategies for word disambiguation in handwritten named entity recognition. We will explore end-to-end deep learning architectures for OH-NER, writer adaptation of the recognition system, and named entity disambiguation that exploits the French mortality database (INSEE) and the French POPP database. An additional benefit of this study is that a unique and very large dataset of handwritten material for named entity recognition will be built.

Laboratoire LITIS, EA 4108, Université de Rouen, 76 800 Saint-Etienne du Rouvray, FRANCE

Telephone: (33) 2 32 95 50 13, Fax: (33) 2 32 95 50 22, Email: Thierry.Paquet@univ-rouen.fr

Missions

The research engineer will be in charge of developing a processing pipeline dedicated to optical printed named entity recognition (OP-NER). The engineer will work closely with a Ph.D. student in charge of optical handwritten named entity recognition (OH-NER).

OP-NER is the project’s easiest task and will benefit from the latest results achieved by the LITIS team on similar problems with financial yearbooks. Images are first processed to extract all textual information. This will be achieved with the DAN architecture designed by LITIS, a deep-learning-based OCR (https://arxiv.org/abs/2203.12273). The research engineer will be in charge of this OCR task. A benchmark of DAN against available OCR software such as Tesseract and EasyOCR will also be conducted. The textual transcriptions will then be processed for named entity extraction and recognition. Named entity recognition is a well-defined task in the natural language processing community. In the EXO-POPP context, however, each entity to be extracted must be defined more precisely, so that a clear distinction can be made between the different people mentioned in the text. For example, we need to distinguish between the names of the wife and of the husband, likewise for the parents of the husband and of the wife, and so on for the witnesses, children, etc. The number of categories has been estimated at around 135. LITIS has defined the tag set and built a first training dataset. Manual tagging of the transcriptions has been made possible by the PIVAN web-based collaborative interface (https://litis-exopopp.univ-rouen.fr/collection/12). This platform provides, in a single web interface, a document image viewer, viewing and editing of OCR results, and text tagging facilities for NER. PIVAN eases the annotation work of the H&SS trainees and makes it possible to build the large annotated datasets that machine learning algorithms need to perform well. The research engineer will oversee dataset generation and curation as required by the EXO-POPP NER task, including the handwritten datasets.

The named entity recognition task will be based on a state-of-the-art machine learning approach. We have started experimenting with the well-known FLAIR NER library (https://github.com/flairNLP/flair), and we plan to continue developing and tuning the EXO-POPP named entity recognition module with this library. The research engineer will be fully responsible for this task.

Tasks

• The research engineer will be in charge of tuning PIVAN for the OCR task. A benchmark of DAN against available OCR technologies such as Tesseract and EasyOCR will also be conducted.

• The research engineer will be in charge of dataset generation and curation as required by the EXO-POPP NER task, including the handwritten datasets.

• The research engineer will be in charge of developing the NER module.

Deliverables:

Transcription of the typescript corpus

Named entities extracted from the typescript corpus

Skills:

• General software development and engineering, Python

• Machine Learning, Computer vision, Natural Language Processing

• Ability to work in a team, curious and rigorous spirit

• Knowledge in web-based programming is a plus

Position to be filled:

Positions: 1 Research Engineer

Time commitment: Full-time

Duration of the contract: September 1, 2022 – August 31, 2023

Contact: Prof. Thierry Paquet, Thierry.Paquet@univ-rouen.fr

Indicative salary: €24 000 annual net salary, plus French social security benefits

Location: LITIS, Campus du Madrillet, Faculty of science, Saint Etienne du Rouvray, France